What Meta’s SAM Audio Release Means for Music Stem Separation & What Actually Matters in Practice

Meta recently introduced SAM Audio, a new AI model for sound segmentation. LALAL.AI, featured in Meta’s official benchmarking, explores what SAM Audio means for professional music stem separation

Meta recently introduced SAM Audio, a new research model designed to segment sound using text, visual, and temporal prompts. It’s an ambitious extension of the Segment Anything concept into the audio domain, and an important signal that audio separation is becoming a central topic in AI research.

At LALAL.AI, we closely follow developments like this, not just because they push the field forward, but because they help clarify what truly matters in real-world audio workflows. Notably, LALAL.AI was included by Meta as one of the reference solutions in their official benchmarking, alongside other well-known commercial services.

So what does SAM Audio actually change (and what doesn’t) when it comes to professional music stem separation?

A Strong Research Model & Clear Research Focus

SAM Audio is a unified, multimodal, generative model. It can separate sounds based on natural prompts like “guitar,” “voice,” or “background noise,” and even use visual cues from video to guide separation. From a research perspective, this is a meaningful step forward, especially for open-vocabulary and multimodal audio understanding.

However, it’s important to separate research capability from production usability.

At this point, SAM Audio is not a ready-to-use product, since running the model requires:

- High-end, expensive professional hardware (NVIDIA A100 GPUs, costing around $20K),

- significant engineering expertise,

- and considerable processing time, even on that hardware.

According to Meta’s own results, SAM Audio processes audio at approximately 0.7× real time on an A100, meaning 10 seconds of audio require around 7 seconds of processing on one of the most expensive accelerators available today.

This is a perfectly reasonable trade-off for a large-scale research model but it also defines where SAM Audio fits best.

By contrast, at LALAL.AI we cater to all our audiences, from hobbyists using our mobile app to extract karaoke tracks, to professionals employing our plugin in complex mastering setups. As such, we’ve launched a VST plugin that can run on the same equipment our users typically work with for audio production.

Why Professional Music Separation Has Different Constraints

Universal, prompt-driven models like SAM Audio are designed to solve a very broad task: separating any sound from any mixture, often in complex, real-world environments.

Professional stem separation is a narrower, and in many ways stricter problem.

High-quality music separation requires:

- preserving stereo width and spatial imaging,

- maintaining reverbs, delays, and subtle ambience,

- avoiding phase issues and artificial artifacts,

- and ensuring the result faithfully represents the original mix.

SAM Audio processes audio in mono, which already places fundamental limits on how well stereo information and spatial effects can be preserved. For creative music production, remixing, mastering, or archival work, these details are not optional; they define the quality of the result.

How LALAL.AI Performed in Meta’s Benchmark

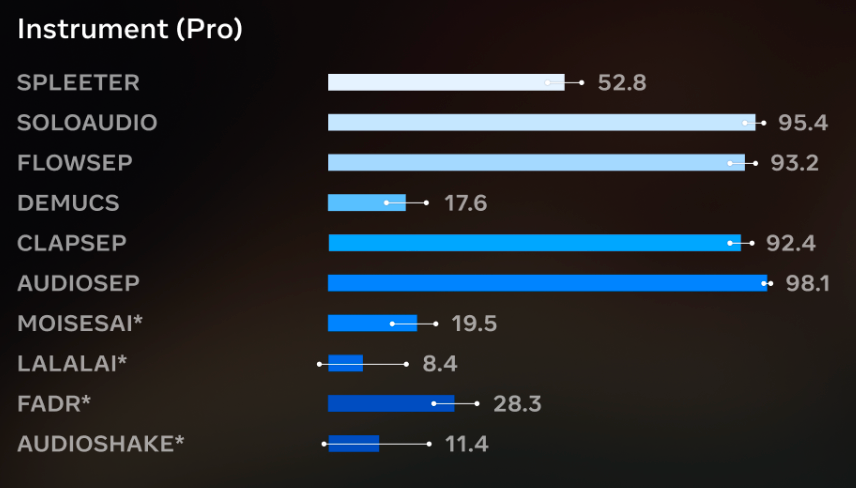

In Meta’s published benchmarks, LALAL.AI was evaluated as a commercial reference alongside services such as Moises, AudioShake, FADR, and others.

In the instrument separation category (which includes vocals) SAM Audio achieved the highest overall scores, but LALAL.AI shows the smallest performance gap to SAM Audio among commercial solutions in professional instrument separation.

At LALAL.AI, our stem separation models are built on transformer-based architectures specifically designed for audio. Unlike general-purpose generative models, our transformers are targeted and discriminative: they focus on separating instruments, vocals, and other stems faithfully, preserving stereo, timbre, and subtle musical nuances. By modeling long-range dependencies across the entire track, our transformers capture the structure of music (such as phrasing, harmonics, reverb tails) allowing us to deliver high-quality stems suitable for professional workflows, from mixing and mastering to remixing.

Generative models like Meta’s SAM Audio use diffusion transformers, which excel in open-domain, multimodal audio separation. These models can respond to text, visual, and temporal prompts and isolate almost any sound. However, for professional music stem separation, diffusion transformers face inherent limitations: the generative process can alter the original sound, affect amplitude and stereo information, and introduce artifacts, which is unacceptable for studio-quality work. In contrast, targeted transformer models like ours are non-generative, ensuring that every separated stem is a faithful representation of the original mix — fast, reliable, and fully production-ready.

In other words, when it comes specifically to professional-grade stem separation, LALAL.AI remains a production-ready solution for professional stem separation, combining top-tier quality with the speed, accessibility, and reliability required in real-world workflows.

Speed and Accessibility Matter in Practice

Benchmark scores are important but performance in real workflows matters just as much.

LALAL.AI is designed to process audio fast, scale efficiently without specialized hardware, and remain accessible to musicians, producers, DJs, and audio engineers without ML infrastructure.

For most creators, the question isn’t whether a model can theoretically separate any sound; it’s whether the tool can deliver high-quality stems quickly, reliably, and today.

It’s also worth acknowledging that universal models like SAM Audio can excel in areas such as noisy, in-the-wild recordings, unstructured audio, speech-heavy or multimodal scenarios.

These are valuable capabilities but they represent a different class of problem than professional music stem separation, where the source material itself is typically high quality and the expectations for output fidelity are much higher.

Our Focus Going Forward

Meta’s SAM Audio is an exciting research milestone, and it highlights how rapidly audio AI is developing. We’re glad to see the field gaining this level of attention and proud that LALAL.AI was included as a reference point in this work.

At the same time, our mission remains unchanged. LALAL.AI is built for high-quality music stem separation that is fast, accessible, faithful to the original sound, and ready for real creative professional workflows.

Research models expand what’s possible, while production tools define what’s usable. And that’s the space we continue to focus on.

Follow LALAL.AI on Instagram, Facebook, Twitter, TikTok, Reddit, LinkedIn, and YouTube to keep up with all our updates and special offers.